Masked Diffusion Models (MDMs) cannot directly compute exact log-likelihoods. Taking DPO as an example, we must instead approximate the log-likelihoods in the objective using Evidence Lower Bounds (ELBOs):

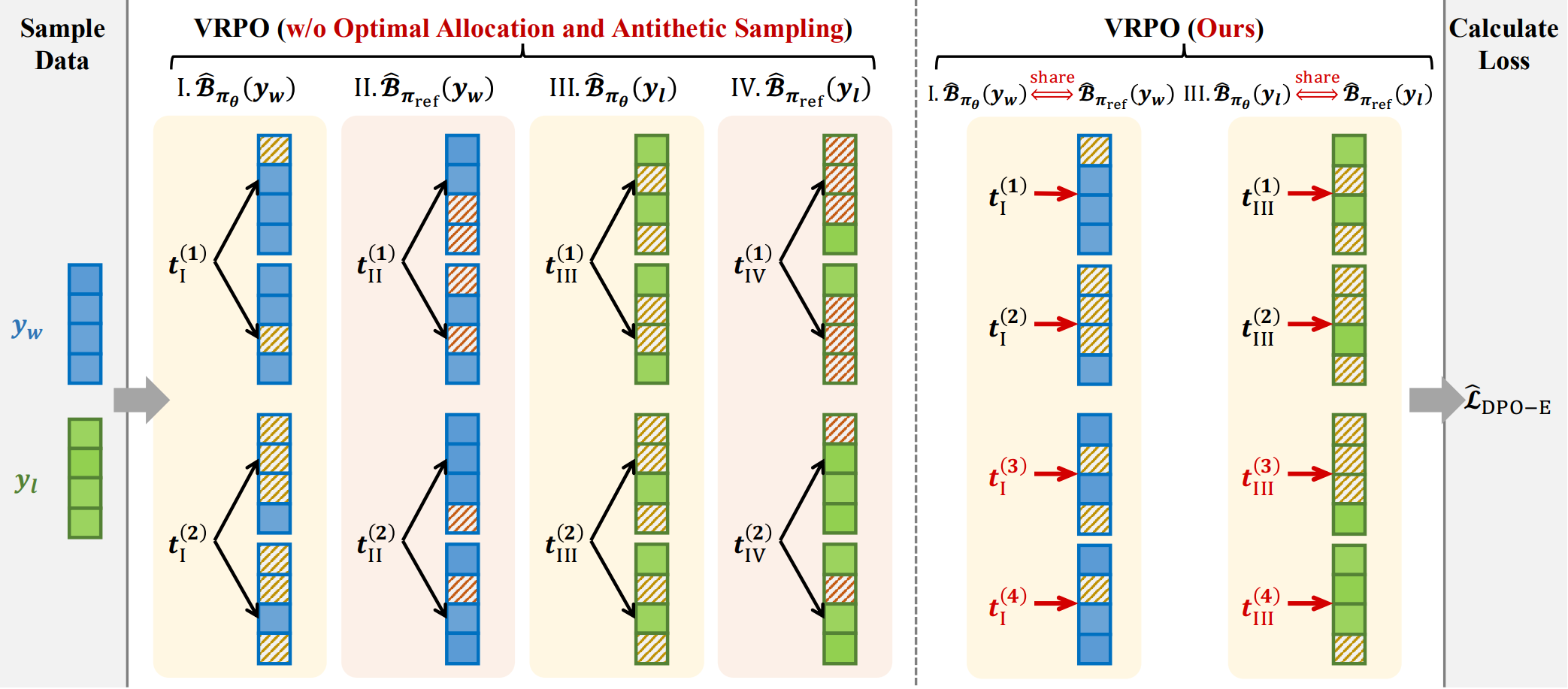

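Key Challenge: Monte Carlo estimation of the ELBO adds variance to each log-likelihood term. Because the log-sigmoid in the DPO loss is nonlinear, this noise does not simply average out. The expected loss under noisy estimates differs from the loss evaluated at the expected estimates (bias), and the loss itself fluctuates from one Monte Carlo draw to the next (variance).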
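
The effect of noise passing through the log-sigmoid can be illustrated with a small simulation. This is a minimal sketch, not the estimator used for MDMs: it assumes the ELBO-based preference margin (the argument of the log-sigmoid) equals a fixed true value plus zero-mean Gaussian noise, and the names `noisy_dpo_loss_stats`, `true_margin`, and `noise_std` are hypothetical, introduced only for this example.

```python
import math
import random
import statistics


def log_sigmoid(x: float) -> float:
    """Numerically stable log(sigmoid(x))."""
    if x >= 0:
        return -math.log1p(math.exp(-x))
    return x - math.log1p(math.exp(x))


def noisy_dpo_loss_stats(true_margin, noise_std, n_trials=20000, seed=0):
    """Compare the loss at the exact margin with the loss under a noisy margin.

    Assumption (illustration only): the Monte Carlo ELBO error is modeled as
    additive zero-mean Gaussian noise on the margin inside the log-sigmoid.
    """
    rng = random.Random(seed)
    exact_loss = -log_sigmoid(true_margin)
    # Each trial stands in for one Monte Carlo estimate of the margin.
    losses = [
        -log_sigmoid(true_margin + rng.gauss(0.0, noise_std))
        for _ in range(n_trials)
    ]
    mean_loss = statistics.fmean(losses)
    return {
        "exact_loss": exact_loss,
        "mean_noisy_loss": mean_loss,
        "bias": mean_loss - exact_loss,           # gap caused by nonlinearity
        "variance": statistics.pvariance(losses),  # spread across draws
    }


if __name__ == "__main__":
    for std in (0.0, 0.5, 2.0):
        stats = noisy_dpo_loss_stats(true_margin=0.5, noise_std=std)
        print(std, stats["bias"], stats["variance"])
```

Since the negative log-sigmoid is convex, Jensen's inequality implies that zero-mean noise on the margin can only raise the expected loss, so the measured bias in this sketch is non-negative and grows with `noise_std`, as does the variance. Real ELBO noise need not be Gaussian or unbiased in the margin, so the numbers here are only qualitative.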